Content-Addressable Memory Introduction

This article is a brief introduction to content-addressable memory (CAM). For a more comprehensive introduction, please see my paper titled: Content-addressable memory (CAM) circuits and architectures: A tutorial and survey.

Content-addressable memories (CAMs) are hardware search engines that are much faster than algorithmic approaches for search-intensive applications. CAMs are composed of conventional semiconductor memory (usually SRAM) with added comparison circuitry that enable a search operation to complete in a single clock cycle. The two most common search-intensive tasks that use CAMs are packet forwarding and packet classification in Internet routers. I introduce CAM architecture and circuits by first describing the application of address lookup in Internet routers. Then we describe how to implement this lookup function with CAM.

CAM Application: Router Address Lookup

Internet routers forward data packets from an incoming port using an address lookup function [RSBD01]. The address lookup function examines the packet's destination address and chooses an output port associated with that address. The router's list of destination addresses and their corresponding output ports is called the routing table. An example of a simplified routing table is displayed in Table 1. All four entries in the table are 5-bit words, with the don't care bit, X, matching both a 0 and a 1 in that position. Because of the X bits, the first three entries in Table 1 represent a range of input addresses, i.e. the entry on Line 1 indicates that all addresses in the range of 101002—101112 are forwarded to port A. The router searches for the destination address of each incoming packet in the address lookup table to find the appropriate output port. For example, if the router receives a packet with the incoming address 01101, the address lookup matches both Line 2 and Line 3 in the table. Line 2 is selected since it has the most defined bits, indicating it is the most direct route to the destination. This lookup style is called longest-prefix matching and is required to implement the most recent Internet Protocol (IP) networking standard.

| Line No. | Address (Binary) | Output Port | ||

| 1 | 101XX | A | ||

| 2 | 0110X | B | ||

| 3 | 011XX | C | ||

| 4 | 10011 | D | ||

Table 1: Simplified routing table.

The routing parameters that determine the complexity of the implementation are the entry size, the table size, the search rate, and the table update rate. Present IPv4 addresses are 32 bits long and proposed IPv6 addresses are 128 bits long. Ancillary information like the source address and quality-of-service (QoS) information can balloon IPv6 routing table entry sizes to 288—576 bits. Currently, routing table sizes are about 30,000 entries but are growing rapidly. Terabit-class routers must perform hundreds of millions of searches per second in addition to thousands of routing table updates per second [RSBD01].

There are many software-based methods to implement the address lookup function [RSBD01], although not all can meet the above requirements. For example, software-based binary searching accomplishes the task if the lookup table is ordered. Binary searching has O(log n) time complexity in addition to the extra time required to insert a new entry in the table. Almost all algorithmic approaches are too slow to keep up with projected routing requirements. In contrast, CAMs use hardware to complete a search in a single cycle, resulting in constant O(1) time complexity. This is accomplished by adding comparison circuitry to every cell of hardware memory. The result is a fast, massively parallel lookup engine. The strength of CAMs over algorithmic approaches is their high search throughput. The current bottleneck is the large power consumption due to the large amount of comparison circuitry activated in parallel. Reducing the power consumption is a key aim of current CAM research.

Content-Addressable Memory

The remainder of this introdcution assumes you have some familiarity with the operation of transistors and basic ciruit organization of random-access memory (RAM).

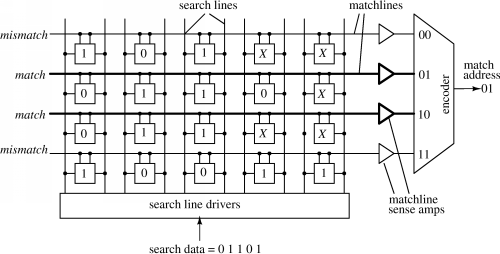

There are two basic forms of CAM: binary and ternary. Binary CAMs support storage and searching of binary bits, zero or one (0,1). Ternary CAMs support storing of zero, one, or don't care bit (0,1,X). Ternary CAMs are presently the dominant CAM since longest-prefix routing is the Internet standard. Figure 1 shows a block diagram of a simplified 4 x 5 bit ternary CAM with a NOR-based architecture. The CAM contains the routing table from Table 1 to illustrate how a CAM implements address lookup. The CAM core cells are arranged into four horizontal words, each five bits long. Core cells contain both storage and comparison circuitry. The search lines run vertically in the figure and broadcast the search data to the CAM cells. The matchlines run horizontally across the array and indicate whether the search data matches the row's word. An activated matchline indicates a match and a deactivated matchline indicates a non-match, called a mismatch in the CAM literature. The matchlines are inputs to an encoder that generates the address corresponding to the match location.

Figure 1: NOR-based CAM architecture (adapted from [Sch96])

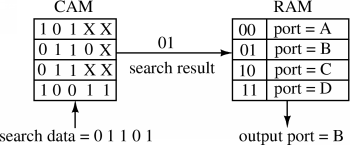

A CAM search operation begins with precharging all matchlines high, putting them all temporarily in the match state. Next, the search line drivers broadcast the search data, 01101 in the figure, onto the search lines. Then each CAM core cell compares its stored bit against the bit on its corresponding search lines. Cells with matching data do not affect the matchline but cells with a mismatch pull down the matchline. Cells storing an X operate as if a match has occurred. The aggregate result is that matchlines are pulled down for any word that has at least one mismatch. All other matchlines remain activated (precharged high). In the figure, the two middle matchlines remain activated, indicating a match, while the other matchlines discharge to ground, indicating a mismatch. Last, the encoder generates the search address location of the matching data. In the example, the encoder selects numerically the smallest numbered matchline of the two activated matchlines, generating the match address 01. This match address is used as the input address to a RAM that contains a list of output ports as depicted in Figure 2. This CAM/RAM system is a complete implementation of an address lookup engine. The match address output of the CAM is in fact a pointer used to retrieve associated data from the RAM. In this case the associated data is the output port. The CAM/RAM search can be viewed as a dictionary lookup where the search data is the word to be queried and the RAM contains the word definitions. With this sketch of CAM operation, we now look at the comparison circuitry in the CAM core cells.

Figure 2: Address lookup with CAM/RAM.

CAM Circuits

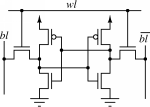

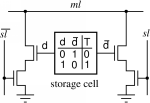

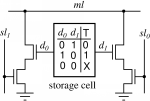

Figure 3(a) displays a conventional SRAM core cell that stores data using positive feedback in back-to-back inverters. Two access transistors connect the bitlines, bl and /bl (we use the prefix / to denote the logical complement in the text and we use an overbar in the figures), to the storage nodes under control of the wordline, wl. Data can be read from the cell or written into the cell through the bitlines. We use this differential cell as the storage for building CAM cells. Figure 3(b) depicts a conventional binary CAM (BCAM) cell with the matchline denoted ml and the differential search lines denoted sl and /sl. The figure also lists the truth value, T, stored in the cell based on the values of d and d. Read and write access circuitry is omitted for clarity in this figure and subsequent CAM core cell figures. For a binary CAM, we store a single bit differentially. The comparison circuitry attached to the storage cell performs a comparison between the data on the search lines (sl and /sl) and the data in the binary cell with an XNOR operation (ml = ! (d XOR sl)). A mismatch in a cell creates a path to ground from the matchline through one of the series transistor pairs. A match of d and sl disconnects the matchline from ground.

(a) 6-transistor SRAM cell.

(b) Binary CAM cell.

(c) Ternary CAM cell

Figure 3: Conventional SRAM storage cell, binary CAM and ternary CAM cells. The CAM cells omit the read/write access circuitry for clarity.

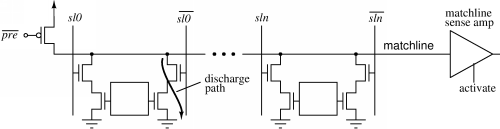

A multi-bit CAM word is a row of adjacent cells created by connecting the cells' matchlines. Figure 4 depicts the relevant matchline circuitry of a single CAM row from Figure 1. Just like a NOR gate pull down network in CMOS logic, the discharge paths on the matchline are all connected parallel giving it the name NOR-based CAM. The classic matchline sensing scheme precharges the matchline high and then asserts the search lines, sl0, /sl0, ..., sln, /sln. A mismatch of any of the bits on the matchline discharges the matchline; an example discharge path is shown in the figure. A match results in the matchline remaining in the precharge state which occurs if all bits in a word match.

Figure 3(c) shows a ternary CAM (TCAM) cell. The TCAM cell stores an extra state compared to the binary CAM, the don't care state, labeled X, which necessitates two independent bits of storage. When a don't care is stored in the cell, a match occurs for that bit regardless of the search data. The figure shows that the TCAM cell stores X when d0 = d1 = 0. The state d0= d1= 1 is undefined and is never used.

Figure 4: The matchline of a NOR-based CAM.

In typical use, a CAM has only one or a small number of matches and most words mismatch. Since mismatches dominate, most matchlines transition both during precharge and during evaluation. This leads to a high power consumption on the matchlines. Further, the search-lines, which broadcast the data to the CAM cells are highly capacitive. The search-lines are another large source of power dissipation in CAM. Because of these large sources of power dissipation, recent research in CAM design focuses on circuit techniques for reducing power consumption.

For further details about CAM circuits, see my paper titled: Content-addressable memory (CAM) circuits and architectures: A tutorial and survey which appears in the IEEE Journal of Solid-State Circuits. My list of academic papers in CAM design is available on the CAM References page.

The two most common search-intensive tasks that use CAMs are packet forwarding [PZ92] and packet classification in Internet routers. Other applications where CAMs are used include processor caches, translation lookaside buffers (TLB), data compression applications, database accelerators, and neural networks [CD89], [Rob92], [Sta93], [Azg99]. Finally, reference [Azg99] is good, brief online introduction to CAM applications in the area of networking (many of the applications discussed are also used outside networking applications).

References

(see also CAM References page)[Azg99] Sherri Azgomi. Using content-addressable memory for networking applications, http://www.commsdesign.com/main/1999/11/9911feat3.htm (page now defunct, but archived in the Web Archive), 1999.

[CD89] Lawrence Chisvin and R. James Duckworth. Content-addressable and associative memory: Alternatives to the ubiquitous RAM. IEEE Computer, 22(7):51 64, July 1989.

[PZ92] Tong-Bi Pei and Charles Zukowski. Putting routing tables in silicon. IEEE Network Magazine, 6(1):42 50, January 1992.

[Rob92] Ian N. Robinson. Pattern-addressable memory. IEEE Micro, 12(3):20 30, June 1992.

[RSBD01] Miguel Ruiz-Sanchez, Ernst W. Biersack, and Walid Dabbous. A survey and taxonomy of IP lookup algorithms. IEEE Network, 15(2):8 23, March-April 2001.

[Sch96] Kenneth James Schultz. CAM-Based Circuits for ATM Switching Networks. PhD thesis, University of Toronto, 1996.

[Sta93] Steve Stas. Associative processing with CAMs. In Northcon/93 Conference Record, pages 161 167, 1993.